Django Import-Export introduction

Based on the commercial experience of building web applications, I can say that uploading data from a file is one of the basic features. Usually, the Django Import-Export library is a good fit for this kind of request.

It supports multiple formats, including xls, csv, json, yaml, and all other formats supported by Tablib. It also has a Django Admin integration, which is really convenient to use.

Integration with Django Admin is the easiest implementation of this library.

In your admin.py file you just need to replace ModelAdmin with ImportExportModelAdmin class:

from django.contrib import admin

from import_export.admin import ImportExportModelAdmin

from .models import Car

@admin.register(Car)

class CarAdmin(ImportExportModelAdmin):

pass

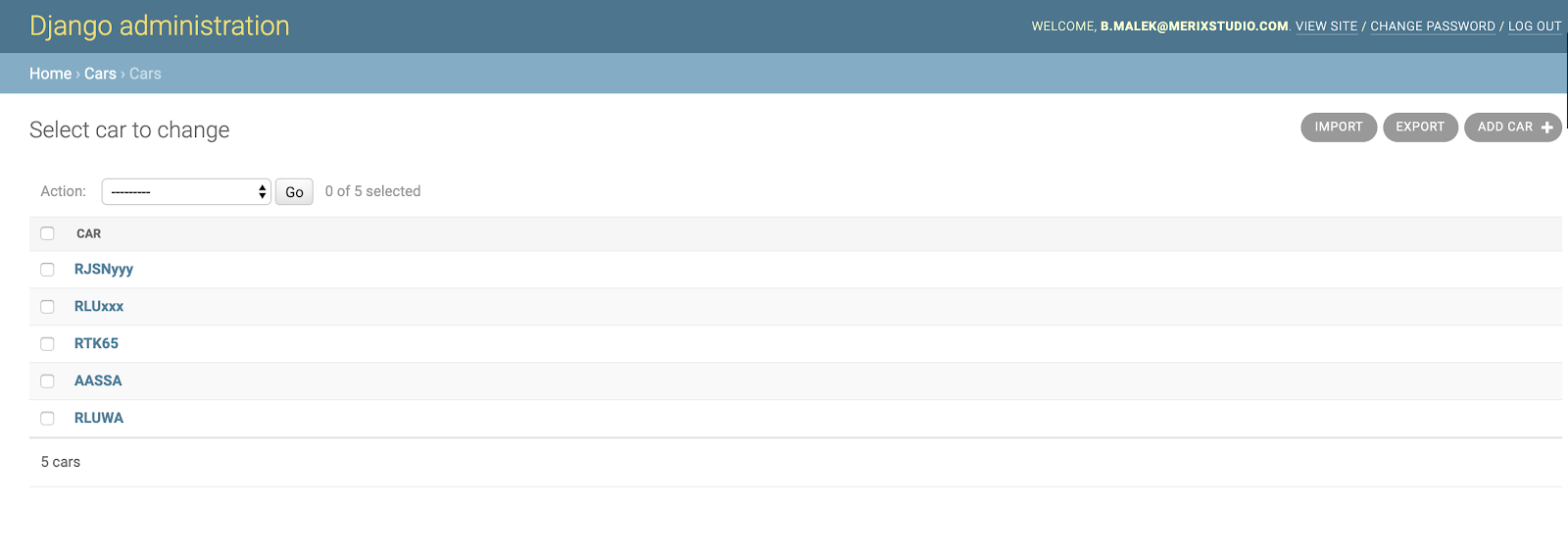

And here you go:

Import and Export buttons appeared in the upper right corner.

Easy, right? If you don’t want to use both import and export feature, instead of inheriting from ImportExportModelAdmin, use either ExportMixin or ImportMixin. How simple is that!

Integration of the Django Import-Export in Django Views

It is also possible to use it in every Django view or with DRF. The Django Import-Export library works with the concept of Resource class, which is very similar to the Django Model Forms.

from departments.models import Department

from import_export import resources, fields

from import_export.widgets import ForeignKeyWidget

from .models import Car

class CarResource(resources.ModelResource):

department = fields.Field(

column_name="department",

attribute="department",

widget=ForeignKeyWidget(Department, "short_name"),

)

class Meta:

model = Car

fields = (

"id",

"registration_no",

"brand",

"model",

"passengers_no",

"weight",

"production_date",

"department",

)

This is a basic Resource example. To be honest, that kind of simple definition is rare in real-life projects. The complication comes with the more complex models.

Take a look at a more advanced Resource class with the One-2-One field with the User model. Allowing import of Employee instances with creating linked User models at the same time.

resources.py

from import_export import resources

from .models import Driver, User

class ImportResource(resources.ModelResource):

def init_instance(self, row, *args, **kwargs):

instance = super().init_instance(*args, **kwargs)

email = row.get("email")

first_name = row.get("first_name")

last_name = row.get("last_name")

phone = row.get("phone")

user, created = User.objects.get_or_create(

email=email, first_name=first_name, last_name=last_name, phone=phone,

)

user.role = user.EMPLOYEE

user.save()

instance.user = user

return instance

class Meta:

model = EMPLOYEE

fields = (

"id",

"user",

"email",

"first_name",

"last_name",

"phone",

"country",

"city",

"street",

"zip_code"

)

This is how an example view can look like:

views.py

from django.http import HttpResponse

from django.views.decorators.csrf import csrf_exempt

from django.utils.translation import ugettext_lazy as _

from rest_framework import status

from tablib import Dataset, core

from .resources import CarResource

@csrf_exempt

def upload_csv(request):

if request.method == "POST":

car_resource = CarResource()

dataset = Dataset()

new_cars = request.FILES["file"]

try:

dataset.load(new_cars.read().decode("utf-8"), format="csv")

except core.InvalidDimensions:

return HttpResponse(

_("Your csv file is incorrect."), status=status.HTTP_400_BAD_REQUEST

)

try:

result = car_resource.import_data(dataset, dry_run=True, raise_errors=True)

except Exception as e:

return HttpResponse(e, status=status.HTTP_400_BAD_REQUEST)

if not result.has_errors():

car_resource.import_data(dataset, dry_run=False)

return HttpResponse(status=status.HTTP_200_OK)

else:

return HttpResponse(status=status.HTTP_405_METHOD_NOT_ALLOWED)

In this view, we perform import_data() function from the Django Import-Export library with a dry_run flag set to true first. Thanks to this, we check the correctness of the data in the file and in case of an error the data is not saved to the database.

And that's it! With little time and even less effort, we have working functionality which allows us to upload data from files and also shows file's construction errors and data errors.

Testing using big data files

Alright, while uploading the small file everything works just brilliant. Let’s check how our functionality behaves with a large amount of data, for instance, thousands of records in one file.

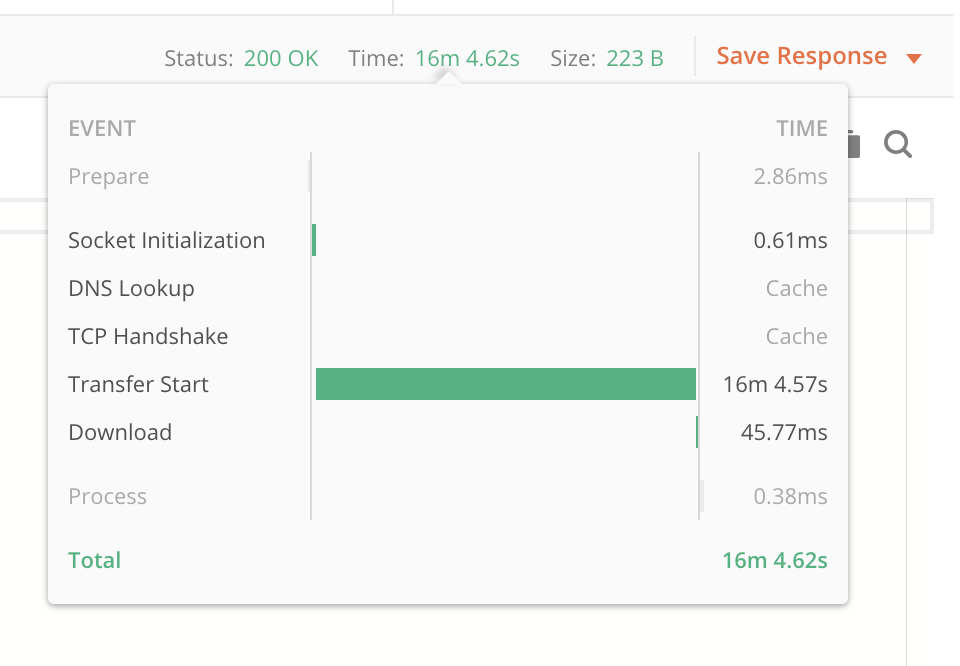

For my testing purposes, I am going to use .csv file. The so-called CSV (Comma Separated Values) format is the most common import and export format for spreadsheets and databases. I have uploaded the csv file with 5000 records and …it took 16 minutes!

Solutions:

A. Move import function to celery task (it's always a good idea to conduct any sort of import/export tasks outside of the request-response cycle);

B. Use WebSockets (no thanks, we want to make our lives easier, no need to complicate things more than necessary);

C. Sit down and cry ;).

I’ll go with option D. - build your own script !!!

Custom python script

In my custom import csv files script, the CSV module of Python’s Standard Library should be enough. The CSV module’s reader and writer objects read and write sequences. You can also read data in the dictionary form using the DictReader class. A simple example of usage below:

imports.py

import csv

from django.core.files.storage import default_storage

from django.db.transaction import atomic

@atomic

def import_process(file_name):

file = default_storage.open(file_name)

decoded_file = file.read().decode("utf-8").splitlines()

reader = csv.DictReader(decoded_file)

You can iterate over every row in the DictReader instance and insert data into Car’s particular fields:

for row in reader:

car = Car(

registration_no=row["registration_no"],

brand=row["brand"],

model=row["model"],

weight=row["weight"],

production_date=row["production_date"],

passengers_no=row["passengers_no"],

)

I have also saved some data from the reader in the different lists and dictionaries. I am going to use it later for data validation:

department_names.append(row["department"])

registration_numbers.append(row["registration_no"])

cars[row["registration_no"]] = car

Before saving objects in the database I am going to check whether the data provided in the file is correct.

Notice that the Import function from the Django Import-Export library terminates and returns an error message when it encounters the first error in the file. With our custom import script, you can check if all data in the file is correct, then collect all errors that appear and return them in just one response at the end. Definitely, it makes more sense considering user experience.

Below is an example function checking whether departments' short names provided in the file match the departments in our database. Depending on your needs, you can check the correctness of the data in all rows of the uploaded file.

imports.py

def check_departments(department_names: List[str]) -> Optional[str]:

old_depots = set(

Department.objects.values_list("short_name", flat=True)

)

if difference := set(department_names) - old_depots:

return f"Following Departments don't exist: {difference}"

If no errors occurred we can finally create objects in the database:

try:

Car.objects.bulk_create(cars.values())

except Exception as e:

return HttpResponse(e, status=status.HTTP_400_BAD_REQUEST)

return HttpResponse("Your file has been successfully uploaded", status=status.HTTP_200_OK)

Please notice I have used the bulk_create() method here. Django bulk_create() makes only 1 query, no matter how many objects are there.

Benchmark the performance

It is time to check how my custom import method works and what is the response time. I will use the same csv file with 5000 records for testing.

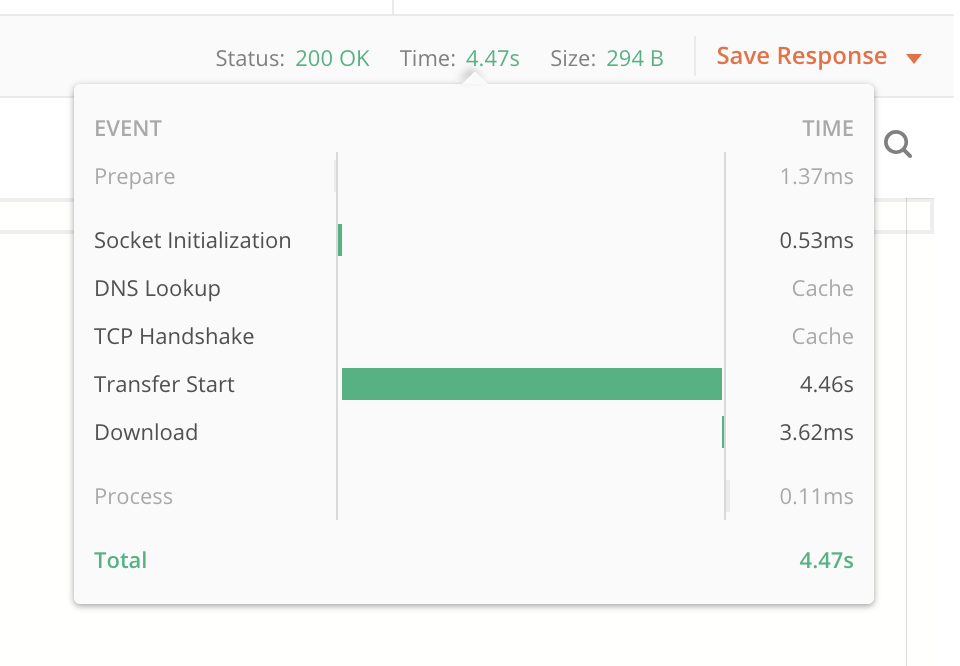

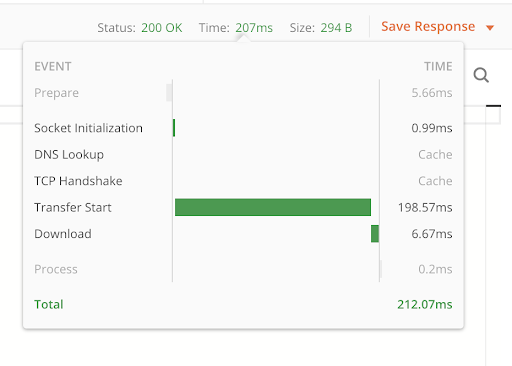

My custom script response time:

Django Import-Export import_data() response time:

The time difference is significant. With the simple python script, I was able to decrease processing time from 16,45 minutes to just 4,47 seconds! It's a huge improvement. Why is that? Mostly, (among the other things) because of using bulk_create() (in my script), instead of creating each instance individually (in Django Import-Export). For more information, I encourage you to check the source code of the Django Import-Export library, to learn how it works.

The sample application created for this article is available on my GitHub.

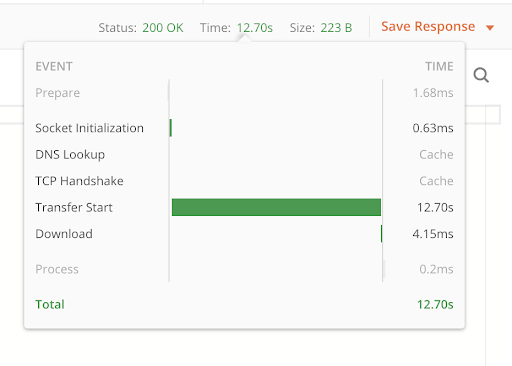

To be sure I’ll check if the difference is also noticeable in the case of upload a smaller file(about 30 records):

My custom script response time:

Django Import-Export import_data() response time:

Alright, the difference is big, but still 12,7 seconds it's not a very bad result and could be acceptable in some cases.

In the case of applications where the uploaded files will not contain a large number of records and are not the core feature, you can consider using the Django Import-Export library due to the speed and ease of development and the simplicity of Django Admin integration.

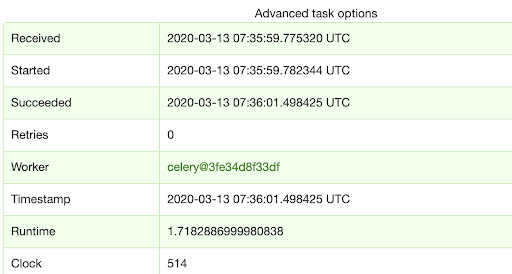

As I mentioned before it is always a good idea to move import features to asynchronous tasks. The response is immediate and you can send the import results by email or use WebSocket to deliver notifications within the app.

I moved the import_data() function to the Celery task and uploaded the same big data file with 5000 car records. It took 1,7 seconds. Great, right? Take a look at Flower report of my Celery task:

Django Import-Export vs custom script?

In my article, I am not trying to convince you to use one method over the other. There is no single answer. You need to choose your own approach to solve the problem because like everything in our life - it depends. Here's a little summary that might help you to choose.

The Django Import-Export Custom script slow fast some customization available fully customizable fast to develop takes a little more effort to develop Django Admin integration you need to write your own supports multiple formats at the same time you can choose which file format to support

returns only the first error found

catches and returns all errors that the file contains in one request

Like what our Python developers do? Check our job offers and join them!

Navigate the changing IT landscape

Some highlighted content that we want to draw attention to to link to our other resources. It usually contains a link .

.svg)

.webp)